The Quantum Leap Meets AI Ethics

Unpacking the Next Era of Computation and Human Responsibility

"We can’t just build the fastest machine; we must first ensure it serves the deepest human needs." — Nadina D. Lisbon

Hello Sip Savants! 👋🏾

The pace of technological advancement is dizzying. Just last week, we saw news about a major quantum computing breakthrough with long-lived qubits¹, a huge step toward realizing computing power that will reshape everything, including AI. This acceleration demands we move beyond simply marveling at the tech. We need a parallel focus on the ethical guardrails and human impact, especially as powerful tools, like medical AI, become ubiquitous³. Are we building a future we actually want?

3 Tech Bites

⚛️ The Quantum Qubit Endurance Boost

Scientists have significantly extended the operational life of qubits, the fundamental units of quantum information¹. Longer coherence times mean fewer errors and more reliable quantum calculations. This isn’t just an engineering feat; it’s the foundation for complex AI models (like advanced molecular simulations for drug discovery) that were previously impossible.

⚕️ The AI Transparency Gap in Medicine

As AI-powered medical devices become common, a crucial question arises: who is paying the doctors to use them?³ New reports highlight the alarming lack of transparency regarding industry payments to healthcare providers using AI devices. Without clear disclosure, potential conflicts of interest could influence clinical decisions, placing patients at risk and eroding trust in technology.

🌐 The Human-Centric AI We Want

The global community is emphasizing the need for an ethical and human-centered approach to AI governance². This isn’t just about avoiding military misuse; it’s about proactively designing AI to promote social good, respect human rights, and ensure equitable access. It means moving beyond a “move fast and break things” mentality and prioritizing long-term societal well-being.

5-Minute Strategy

🧠 Your 5-Minute AI ‘Conflict Check-Up’

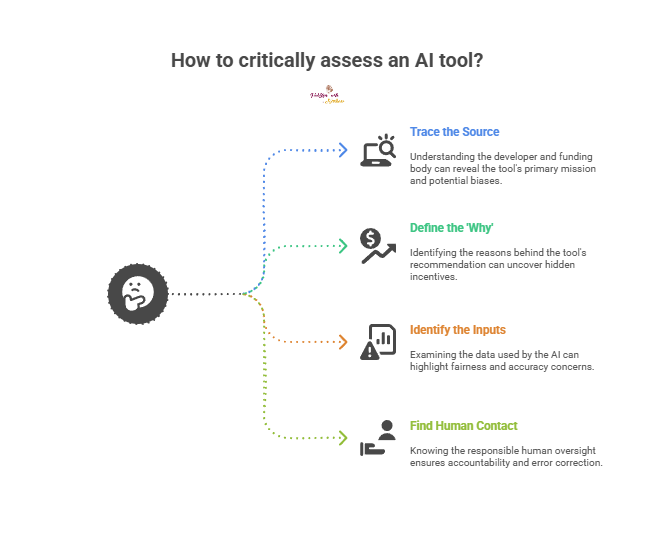

Given the opacity around AI in professional fields, let’s critically assess any AI tool you plan to use or purchase in a professional capacity.

Trace the Source

Who is the core developer or funding body behind this AI solution? A quick web search can reveal their primary mission and investors.

Define the ‘Why’

Why is this tool being recommended or pushed? Is it purely efficiency, or could there be a financial incentive you’re unaware of?

Identify the Inputs

What data is the AI relying on, and how does that affect its fairness or accuracy? Look for documentation on training data biases.

Find the Human Contact

Who is the person or team responsible for oversight and error correction? A well-governed AI system always has a clear human point-of-contact.

1 Big Idea

💡 The Responsibility That Scales with Power

The intersection of quantum computing advancements and generalized AI heralds an era of nearly unimaginable power. This isn’t hyperbole; the quantum breakthrough with long-lived qubits¹ means that we are rapidly closing in on machines capable of cracking current encryption methods and accelerating scientific discovery at a scale that will fundamentally shift global power structures. The potential benefits, like curing diseases and sharpening climate models, are immense.

However, as computation scales exponentially, our ethical responsibility must scale even faster. The UN is right to push for human-centered AI², because the decisions being coded into today’s algorithms will be magnified by tomorrow’s quantum power. Consider the lack of transparency in medical AI payments³: if conflicts of interest are opaque now, imagine the consequences when AI determines life-saving treatments with quantum-level precision based on potentially skewed commercial inputs. The faster the machine, the more catastrophic the unchecked bias or conflict.

We must actively resist the seductive pull of speed and efficiency for their own sakes. The true measure of technological success shouldn’t be FLOPS (Floating Point Operations Per Second), but rather TRUST. This requires developers to embed human rights and fairness into the very architecture of their systems, and it requires users and regulators to demand transparency, not just about how the AI works but about who benefits from its deployment. We must see this new era not as a technological race, but as a moral imperative: to ensure that the power of quantum-enhanced AI uplifts all of humanity, rather than concentrating influence and risk in the hands of a few.

If this made you pause and think about the tech you use daily, please share it! The best way to build a better future is by having this conversation with more people.

P.S. Know someone else who could benefit from a sip of wisdom? Share this newsletter and help them brew up stronger customer relationships!

P.P.S. If you found these insights valuable, a contribution to the Brew Pot helps keep the future of work brewing.

Resources

As Medical AI Use Grows, Industry Payments to Providers Remain Opaque (Referencing a STAT News report from 2025: Artificial intelligence devices and the Open Payments conflict of interest)

Sip smarter, every Tuesday. (Refills are always free!)

Cheers,

Nadina

Host of TechSips with Nadina | Chief Strategy Architect ☕️🍵

The 5-minute checklist is very helpful. Thanks!

Quantum computing has been a long time coming and, like you mentioned, would make AI take leaps and bounds forward. Thanks again for the amazing and informative read.