The Human Firewall

Why Our Critical Thinking is the Ultimate Security Patch

“We often look for a software patch to fix a vulnerability, but the most important security update is the one we install in our own minds: skepticism.” — Nadina D. Lisbon

Hello Sip Savants! 👋🏾

Are you out shopping with the clock ticking? Take 45% off all TechSips shop items, valid until January 5th. Joy, with no deadlines.

✨ Use Code: SIPJOY45 ✨

We are closing out 2025 with a reality check. As we rush to integrate AI agents into every browser and workflow, the creators of these very systems are reminding us that the technology has hard limits. Distinguishing a helpful user instruction from a hacker's malicious command is not just a bug; it's an architectural challenge. This week, we explore why the solution isn’t just better code, but better human judgment.

3 Tech Bites

🔓 OpenAI: "Always Vulnerable"

OpenAI has admitted that AI browsers may always be vulnerable to prompt injection attacks [1]. Because LLMs mix "instructions" (what you tell it to do) with "data" (the content it processes), malicious hidden text can trick them on websites. This confirmation shifts the focus from "fixing" the bug to managing the risk through human oversight.

📉 Gartner's "AI-Free" Prediction

In a striking forecast for 2026, Gartner predicts that 50% of global organizations will soon require "AI-free" skills assessments [2]. As reliance on GenAI grows, companies are worried about the "atrophy" of critical thinking. The ability to solve problems without an algorithm is becoming a premium asset.

👩🏫 Teachers Training the Trainers

NBC News reports on the growing trend of teachers and students "co-learning" to train and fine-tune AI interactions [3]. OpenAI has released a new course designed specifically to help educators navigate this shift. The focus isn't just on using the tools, but on teaching students (and teachers) how to guide, correct, and "train" the models to be safer and more accurate.

5-Minute Strategy

🧠 The “Analog Anchor” Technique

Combat the “cognitive atrophy” Gartner warns about by ensuring you lead the AI, rather than following it. Use this technique for your next complex task:

The Anchor

Before opening ChatGPT or Claude, grab a sticky note. Write your top 3 expected points, solutions, or unique angles for the task at hand. Do this entirely offline.

The Prompt

Run your prompt as usual.

The Gap Analysis

Compare the AI’s output to your sticky note. Did the AI miss a subtle nuance or local context you wrote?

If yes, that missing piece is your “Human Value Add.”

If no, your anchor was too generic; push your thinking deeper next time.

1 Big Idea

💡 The Renaissance of "AI-Free" Thinking

For three years, "prompt engineering": how to converse with machines has consumed us. But the pendulum is swinging back. Gartner’s prediction that half of all major organizations will soon require “AI-free” skills assessments is a massive signal [2]. It suggests that while AI proficiency is necessary, human proficiency is non-negotiable.

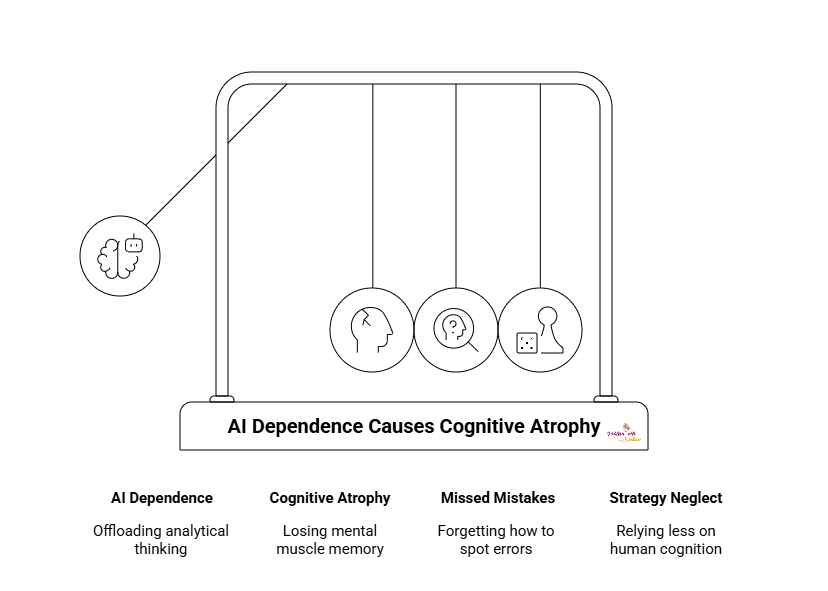

The risk isn’t just that AI makes mistakes (which we know it does); the risk is that we forget how to spot them. If we offload all our analytical thinking to an LLM, we suffer from what experts call “cognitive atrophy.” We lose the mental muscle memory required to deconstruct a complex problem, write a persuasive argument from scratch, or debug code without a copilot.

This doesn’t mean we should stop using AI. It means we need to treat it as a bicycle for the mind, not a replacement for our legs. The most valuable employees in 2026 won’t just be the ones who can use ChatGPT the fastest; they will be the ones who can pause, look at the AI’s output, and say, “That doesn’t look right,” because they still know the first principles of their craft.

We are entering an era where “unassisted” work might become a badge of honor, or at least a verified credential. The future of work involves a hybrid approach: using AI for speed and scale, but relying on unassisted human cognition for strategy, ethics, and final judgment. The “AI-free” skill set is your insurance policy against a hallucinating machine.

Next time you’re about to prompt an AI, take 30 seconds to outline your thoughts on paper first. Keep those synapses firing! Share this with a friend who loves (or fears) the future.

P.S. Know someone else who could benefit from a sip of AI wisdom? Share this newsletter and help them brew up stronger customer relationships!

P.P.S. If you found these AI insights valuable, a contribution to the Brew Pot helps keep the future of work brewing.

Resources

Sip smarter, every Tuesday. (Refills are always free!)

Cheers,

Nadina

Host of TechSips with Nadina | Chief Strategy Architect ☕️🍵

Great insights like always, love the sips!