The Cascading Cost of Centralized Failure

Why AI’s Infrastructure is a Matter of Public Trust and Resilience.

"The true measure of a technology isn’t its peak performance, but how gracefully it fails. In public services, a lack of grace can cost lives and trust." — Nadina D. Lisbon

Hello Sip Savants! 👋🏾

Yesterday's massive AWS cloud outage (October 20, 2025) crippled services globally, disrupting finance and education systems worldwide. It served as a stark, fresh reminder of our critical and fragile dependence on a few tech giants. A DNS issue in a single AWS region caused the event, showing the cascading chaos that a single point of failure can unleash in our interconnected, cloud-powered world. Millions of users were affected, from London banks to US schools [1, 2, 4]. A similar incident occurred last year: the CrowdStrike malfunction disrupted health and business systems [3, 7], forcing manual operations at hospitals and grounding flights. The timing of this latest incident matters, because the UK government is rushing to announce its new AI blueprint today, promising to “slash waiting times” and “drive growth” for public services [6]. That raises a crucial question: how much systemic risk comes bundled with that promise? We must weigh the revolutionary promise carefully against the inherent, human-scale risks of systemic failure and algorithmic bias.

3 Tech Bites

🧬 AI in Life Sciences Surge

AI is booming in drug discovery, driven by a projected annual value of up to $410 billion in the pharmaceutical sector by 2025 [5]. Large Language Models (LLMs) like Anthropic’s Claude are being adapted for life sciences [8]. They connect to platforms like Benchling and PubMed to help summarize literature and accelerate protocol generation. The goal is to shorten R&D cycles from weeks to hours.

🏛️ UK’s AI Regulation Blueprint

The UK government is pushing a “test and learn” approach for AI in public services. It announced plans for “AI Growth Labs,” or “sandboxes,” in which some regulations are temporarily relaxed to make room for innovation [6]. These cover sectors like the NHS and housing planning. The goal is to speed up AI adoption. However, this strategy demands unwavering oversight to ensure fundamental rights and consumer protection are never compromised [6].

☁️ The “Democratic Deficit” of Cloud

The massive AWS cloud outage exposed a key danger. It showed the risk of relying on just a few providers for critical infrastructure [1]. The outage was caused by a DNS issue in the US-East-1 region. Experts warn this over-reliance creates a “democratic deficit.” It puts essential services at the mercy of internal corporate glitches [4]. The ripple effect shows the need for greater diversification and resilience in our digital backbone.

5-Minute Strategy

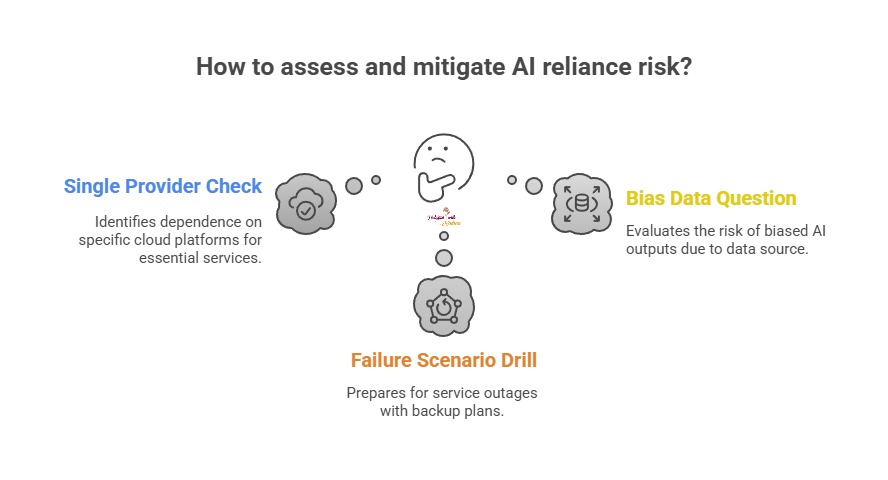

🧠 Audit Your AI Reliance Risk

This quick assessment helps you or your organization map your critical dependence on single-source AI/cloud infrastructure.

The “Single Provider” Check

List the three most essential digital tools/services you use for work or life (e.g., communication, finance, logistics).

What single cloud platform hosts each one? Check the vendor’s status pages (e.g., AWS Health Dashboard [4]).

The “Failure Scenario” Drill

For each service, imagine a 4-hour, unannounced global outage.

What is your immediate human-driven, analog, or pre-digital backup plan? (e.g., using a non-cloud document, calling a backup number, cash for a purchase).
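For readers who like to keep their audit in a script rather than on a napkin, here is a minimal sketch of the two checks above. The service names, provider names, and fallback plans are hypothetical placeholders, and the rule "more than one service on the same provider counts as concentration" is an assumption for illustration, not a formal risk standard.

```python
from collections import Counter

# Hypothetical inventory: service -> (cloud provider, analog fallback or None).
# Replace these placeholder entries with your own three essential services.
services = {
    "team chat": ("Provider A", "phone tree"),
    "payroll": ("Provider A", None),
    "document storage": ("Provider B", "local copies"),
}


def audit(inventory):
    """Return (providers hosting several services, services with no fallback)."""
    # "Single Provider" check: count how many services sit on each provider.
    counts = Counter(provider for provider, _ in inventory.values())
    concentrated = sorted(p for p, n in counts.items() if n > 1)
    # "Failure Scenario" drill: flag services with no human-driven backup plan.
    no_fallback = sorted(
        name for name, (_, fallback) in inventory.items() if not fallback
    )
    return concentrated, no_fallback


concentrated, no_fallback = audit(services)
print("Providers hosting several of your services:", concentrated)
print("Services with no analog backup:", no_fallback)
```

Anything that lands in either list is a candidate for a backup plan or a second provider.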

The “Bias Data” Question

If you use an AI tool for high-stakes decisions (e.g., medical pre-screening or resource allocation), do you know the source and diversity of the data it was trained on?

If the data is biased, the output will be, too.

If you can’t get this information, escalate the explainability risk.
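If a vendor does share a sample or summary of the training data, one rough first look is to see how each group is represented in it. The sketch below is only that: the field name `age_band`, the sample records, and the 10% threshold are all hypothetical, and a low share is a prompt to ask more questions, not proof of bias.

```python
from collections import Counter


def representation_shares(records, field):
    """Share of records per value of `field`; missing values count as 'unknown'."""
    counts = Counter(record.get(field, "unknown") for record in records)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}


def underrepresented(shares, threshold=0.10):
    """Values whose share falls below the (assumed) threshold."""
    return sorted(value for value, share in shares.items() if share < threshold)


# Hypothetical sample of 20 training records, for illustration only.
sample = (
    [{"age_band": "18-39"}] * 12
    + [{"age_band": "40-64"}] * 7
    + [{"age_band": "65+"}] * 1
)

shares = representation_shares(sample, "age_band")
print("Groups below the threshold:", underrepresented(shares))
```

A representation count says nothing about label quality or how the model behaves for each group, so treat it as the start of the conversation with your vendor.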

1 Big Idea

💡 The Accountability Gap: Who is Liable When the Algorithm Fails?

The rush to automate public services creates a massive legal and ethical vacuum. This is the Accountability Gap. When an AI system fails, the harm is no longer confined to a single glitch; it becomes systemic. The recent AWS outage shows how centralized infrastructure can collapse globally [1]. But when a diagnostic AI makes an error, the question is not whether the system failed, but who is responsible. Is it the cloud provider? Is it the algorithm developer? Is it the government department that procured the system? Or is it the human professional who blindly followed the recommendation? Total automation erodes clear lines of responsibility.

The “black box” nature of complex AI makes this gap wider. These systems are often opaque, and they are trained on vast datasets that can embed existing societal biases. That is a crucial flaw in healthcare or housing allocation models. If an algorithm suggests unfair treatment, the public cannot simply ask the AI system for an explanation. The UK government’s new regulatory “sandboxes” encourage faster AI deployment in the NHS [6]. This speed cannot come at the cost of legal clarity. Policymakers must know who bears the legal liability when an un-auditable, biased system causes patient harm.

Our current legal and ethical frameworks were designed for human professionals, not for autonomous, self-learning digital agents. When an AI makes a bad decision, developers claim the system is only a tool. Users claim they followed the output of a certified system. This deflection leaves the injured citizen with no clear path to redress. The failure to define liability creates a culture of diffused responsibility. This weakens the fundamental contract between the citizen and the state.

The goal must be to build a Human Firewall. This means embedding mandatory human oversight at every critical decision point. Regulators must demand transparency and auditability. They must prioritize patient autonomy. The purpose of AI is to augment human intelligence, not to autonomously rule critical decision-making processes. Ignoring this accountability gap risks eroding the public trust required for a just system. The resulting system will be more efficient but far less responsible.

If you’ve ever had a public service or personal device fail spectacularly because of a hidden tech glitch, share this sip! Let’s talk about where tech centralization is taking us. Share your thoughts!

P.S. Know someone else who could benefit from a sip of CRM wisdom? Share this newsletter and help them brew up stronger customer relationships!

P.P.S. If you found these AI insights valuable, a contribution to the Brew Pot helps keep the future of work brewing.

Resources

AWS Outage, December 10th, 2021 - Amazon Web Services (AWS) Outage Message.

Microsoft-CrowdStrike Incident. Reference to a prior major systemic IT failure impacting public services.

AWS Outage, October 20th, 2025. Official source for AWS Outage.

Scottish data centres powering AI already using enough water to fill 27 million bottles a year.

AI could save NHS staff 400,000 hours every month, trial finds.

Sip smarter, every Tuesday. (Refills are always free!)

Cheers,

Nadina

Host of TechSips with Nadina | Chief Strategy Architect ☕️🍵

Great read - the AWS outage, along with the previous CrowdStrike outage, are most definitely a warning sign for less monopoly in the cloud space and to spread out infrastructure and responsibilities across multiple vendors. Thanks for providing additional sources as well!