The "AI Factory" Is Now Open

From Google in defense to NVIDIA in manufacturing, AI has moved from a "tool" to an "industrial-scale engine."

"For the last two years, we’ve been test-driving AI. Now, we’re building the assembly line. The ‘factory’ is open, and it’s running on industrial-strength, high-stakes engines." — Nadina D. Lisbon

Hello Sip Savants! 👋🏾

AI’s “what if” phase is over. The “how-to” industrial era has begun. Recent news shows this isn’t just about software; it’s the foundation for a new industrial structure. We’re seeing AI for secure defense [1], “AI factories” for manufacturing [2], and the cloud “power grid” to run it [3]. This is the start of AI’s industrial age, and the engines are just now turning on.

3 Tech Bites

🛡️ The “On-Prem” Engine for National Security

You can’t run national defense on the public cloud. The Lockheed Martin and Google partnership [1] is the perfect example of this new industrial-grade AI. They are moving Google’s AI onto highly secure, private systems that are cut off from the internet. This ensures classified national security data never leaves the building. This is a high-security, specialized “engine,” not a public app, built for a zero-fail mission.

🏭 The “Factory-for-Factories” Engine

It doesn’t get more industrial: Samsung is building an “AI factory” using NVIDIA’s platform [2]. Its mission is to optimize its complex semiconductor manufacturing and, in turn, build better AI chips. This creates a powerful feedback loop: a specialized “engine” with 50,000+ GPUs uses “digital twins” to perfect the chip-making process, all to produce the hardware for other AI engines.

☁️ The “Power Grid” Engine

Most companies can’t afford to build their own multibillion-dollar AI factory. They will need to rent power from one. Cloud providers like AWS are racing to be that power grid. In a massive $38 billion partnership announced just today, AWS will provide OpenAI with hundreds of thousands of state-of-the-art NVIDIA GPUs [3]. This solidifies AWS’s role as the “public utility” for this new industrial age. Everyone else will just plug in.

5-Minute Strategy

🧠 Is Your AI a “Tool” or an “Engine”?

How you manage an AI “tool” is completely different from how you govern an “industrial engine” (like an AI in your supply chain). Use the core functions of the NIST AI Risk Management Framework [4] to see what you’re really building.

GOVERN

Have you assigned accountability? If an AI “tool” fails, it’s an embarrassment. If an AI “engine” fails, it’s a crisis (a factory shutdown, a security breach).

An “engine” requires a formal governance structure, clear ownership, and robust human-in-the-loop (HITL) oversight before it’s deployed [5].

MAP

Have you mapped the risks? For a “tool,” you map reputational risk. For an “engine,” you must map systemic risks:

What happens to your production line if the data is bad? [2]

What happens if classified data leaks? [1]

MEASURE

Do you have “engine-grade” metrics? A “tool” is measured on engagement or time saved. An “engine” must be measured on reliability, safety, and fairness using auditable, quantitative tests.
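For the hands-on crowd, here is one way “engine-grade” metrics could look in practice: a minimal Python sketch of a release gate that checks each metric against a threshold and keeps an auditable record. The metric names, values, and thresholds are hypothetical placeholders, not figures from NIST or any cited source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricCheck:
    """One quantitative test. All names and numbers used below are hypothetical."""
    name: str
    value: float
    threshold: float
    higher_is_better: bool = True

    def passed(self) -> bool:
        # Compare in the direction that matters for this metric.
        if self.higher_is_better:
            return self.value >= self.threshold
        return self.value <= self.threshold


def release_gate(checks):
    """Return (ok, audit_log): ok is True only if every check passes."""
    audit_log = [
        f"{c.name}: value={c.value} threshold={c.threshold} "
        f"{'PASS' if c.passed() else 'FAIL'}"
        for c in checks
    ]
    return all(c.passed() for c in checks), audit_log


# Illustrative numbers only.
checks = [
    MetricCheck("reliability_uptime", 0.999, 0.995),
    MetricCheck("safety_incident_rate", 0.002, 0.001, higher_is_better=False),
    MetricCheck("fairness_gap", 0.03, 0.05, higher_is_better=False),
]
ok, audit_log = release_gate(checks)
```

In this made-up run the gate stays closed because the safety metric misses its threshold, and the log records exactly which test failed and by how much.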

MANAGE

What’s the “E-Brake”? An industrial “engine” cannot be a “black box.” You must have a clear process to manage, test, and correct it when it fails. If you can’t turn it off or override its decision, you haven’t built an engine; you’ve built a liability.
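To make the “E-Brake” idea concrete, here is a small Python sketch, assuming the AI can be wrapped in a single function. The class and method names (`GovernedEngine`, `decide`, `stop`) are invented for illustration and do not come from any real framework.

```python
class EmergencyStop(Exception):
    """Raised when someone asks a stopped engine for a decision."""


class GovernedEngine:
    """Hypothetical wrapper that adds an off switch and a human override."""

    def __init__(self, model_fn):
        self._model_fn = model_fn
        self._enabled = True
        self.audit_log = []

    def stop(self, reason):
        # The "E-Brake": no further decisions until a human re-enables it.
        self._enabled = False
        self.audit_log.append(("stop", reason))

    def decide(self, inputs, human_override=None):
        if not self._enabled:
            raise EmergencyStop("engine is stopped")
        proposed = self._model_fn(inputs)
        # A human decision, when supplied, always wins over the model.
        final = proposed if human_override is None else human_override
        self.audit_log.append(("decision", proposed, final))
        return final


# Toy stand-in for a real model.
engine = GovernedEngine(lambda x: "approve" if x["score"] > 0.5 else "reject")
first = engine.decide({"score": 0.9})
second = engine.decide({"score": 0.9}, human_override="reject")
engine.stop("bad upstream data")
```

The design choice worth noticing: the override and the stop both write to the same log as ordinary decisions, so there is a single record to audit when something goes wrong.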

1 Big Idea

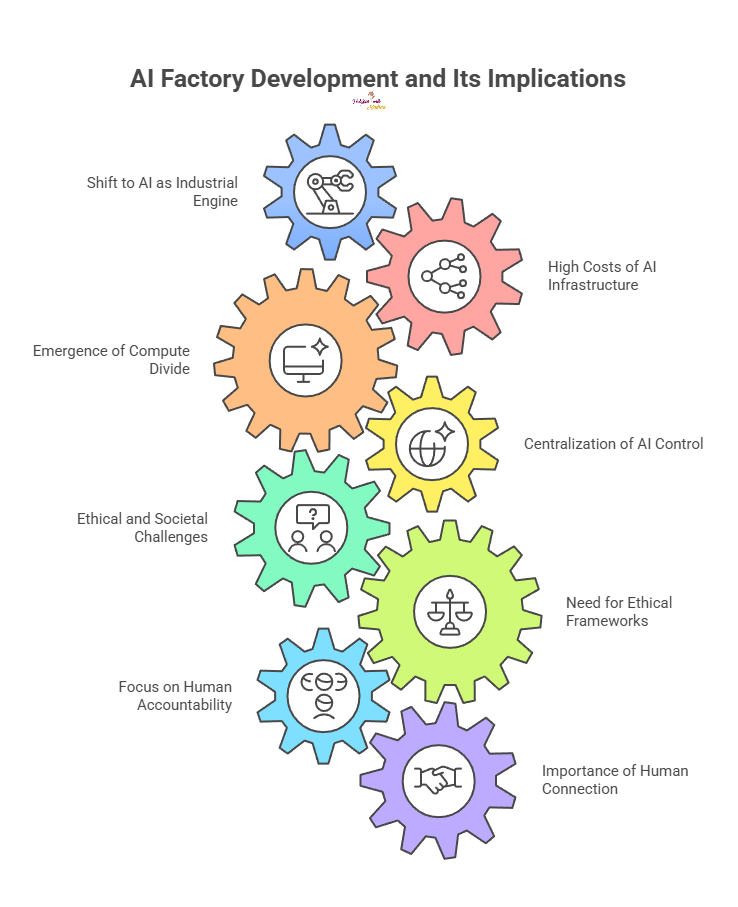

💡 The “Compute Divide”: Who Owns the AI Factories?

This shift from “tool” to “industrial engine” is about more than technology; it’s about the centralization of power. Just like the first Industrial Revolution, this one is being defined by who owns the “means of production.” But today, the means of production isn’t steel or electricity; it’s “compute.”

Building these “AI factories” is astronomically expensive. Enterprise-grade AI projects can cost $500,000 to over $2 million [6], and a single top-tier NVIDIA H100 GPU costs over $30,000 to buy [7]. The portion of companies spending over $100,000 per month on AI more than doubled in the last year [8].

This creates a new “compute divide” [9]: a global split between the handful of trillion-dollar companies and nations that can afford to build the AI factories and everyone else, who will be forced to rent access from them.

Only 16% of nations have large-scale data centers, and US and Chinese companies operate over 90% of the data centers that other institutions use for AI work [9]. This isn’t just a business problem; it’s a profound human-centered challenge. What happens to innovation when only a few giants control the “plumbing”? How do we prevent compute-rich monopolies from dominating the entire market? What happens to global power dynamics when a few corporations control the “on/off switch” for a nation’s intelligence infrastructure? [1]

We are at an inflection point. As we build these powerful “engines,” our human job isn’t to be a cog in the machine. It’s to be the conscience of the machine. Our role is to ask the hard questions before the engines are running at full speed. How do we build in “safety valves,” “public options,” and “labor laws” for this new era?

This is why frameworks like the NIST AI RMF [4] and new regulations like the EU AI Act [5] are so critical. They aren’t just rules; they are the guidelines for building human accountability into the factory floor. The most advanced “tech” won’t save us; the most dedicated humanity will.

All this talk about “engines” and “factories” is a lot. Don’t forget to unplug from the “grid” and connect with a human.

P.S. Know someone else who could benefit from a sip of AI wisdom? Share this newsletter!

P.P.S. If you found these AI insights valuable, a contribution to the Brew Pot helps keep the future of work brewing.

Resources

NIST AI Risk Management Framework: A tl;dr - Wiz (Jan 31, 2025)

Custom AI Solutions Cost Guide 2025: Pricing Insights Revealed - Medium (Mar 31, 2025)

The AI Capital Divide: How 2025–2030 Will Kill Small Business in America - Medium (Oct 21, 2025)

The Global A.I. Divide - Benton Institute for Broadband & Society (Jul 16, 2025)

Sip smarter, every Tuesday. (Refills are always free!)

Cheers,

Nadina

Host of TechSips with Nadina | Chief Strategy Architect ☕️🍵