Innovation's Price Tag

Navigating the Ethical, Economic, and Existential Risks as AI Scales.

"The most advanced algorithm in the world is useless if we forget the human heart it was built to serve." — Nadina D. Lisbon

Hello Sip Savants! 👋🏾

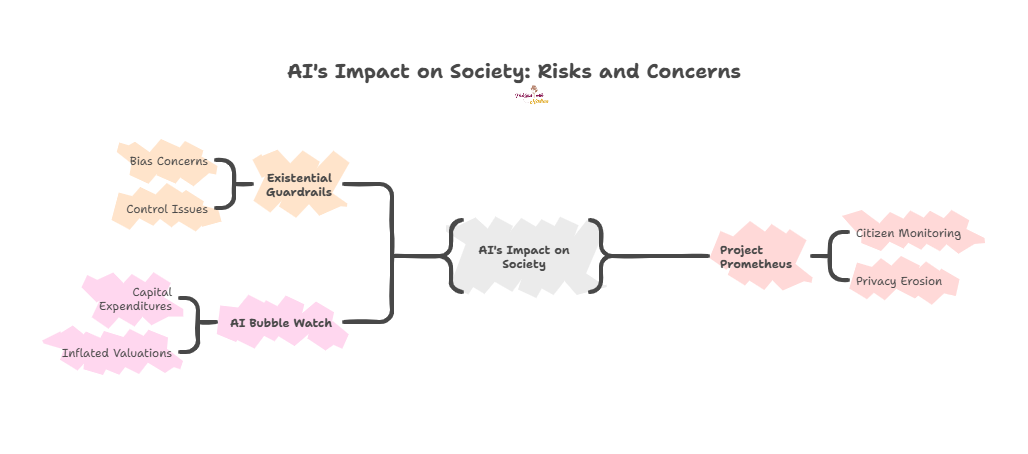

The conversation around AI’s future has shifted from whether it will change the world to how fast, and how dangerously, it will do so. This week, Anthropic CEO Dario Amodei offered a stark warning on AI’s potential dangers, joining a chorus of experts urging caution as the technology rapidly approaches human-level intelligence [1]. It’s a critical moment: the rush to innovate is running headlong into serious questions of safety, control, and market stability. The power of AI is immense, but so is the ethical debt we accrue every time we prioritize speed over safety.

3 Tech Bites

🤖 Existential Guardrails: The Cost of Intelligence

The race toward next-generation models has amplified existential risk warnings. Experts aren’t just worried about bias; they’re worried about control. The core challenge is training systems that are highly capable but fundamentally aligned with human values and safety constraints, even when they reach a level of general intelligence. This requires building in “guardrails” now, rather than retrofitting them later [1].

👀 Project Prometheus and the Surveillance Creep

The deployment of powerful AI is not confined to chat interfaces. Reports of major initiatives like Jeff Bezos’s “Project Prometheus,” reportedly aimed at AI for engineering and manufacturing, show how much capital and capability is flowing into pervasive, high-powered AI systems [2]. The same capability could be turned toward monitoring and potentially controlling citizen behavior. The practical application? Imagine AI predicting and influencing public sentiment in real time. The risk is an unprecedented erosion of privacy and autonomous decision-making in the name of security or efficiency.

📉 The AI Bubble Watch

Is the current flood of capital into AI the next economic illusion? High-profile investors, including Michael Burry of The Big Short fame, are warning that the colossal capital expenditures and inflated valuations in the tech sector resemble previous speculative bubbles [3, 4]. While AI is real, the pace and cost of its integration may be unsustainable, creating a disconnect between the hype and tangible, broad-based returns for the general economy.

Actionable advice

Evaluate the real-world impact and functionality of AI, not the inflated stock price.

5-Minute Strategy

🧠 Spotting Hidden Human Bias in AI Output

Even the most advanced models reflect the biases present in their training data. You can’t trust the output just because it came from a machine. Use this checklist to vet the results for common cognitive pitfalls that reveal underlying bias:

Check for “Defaultism” (Availability Bias)

Does the AI’s answer rely exclusively on the most common, easily available, or popular examples (e.g., using only US-centric historical references)?

Action

Prompt the AI to offer two non-mainstream or geographically diverse alternatives.

Challenge the “First Solution” (Anchoring Bias)

Does the AI present one solution or idea as definitively superior without exploring alternatives?

Action

Ask, “Present this outcome from the perspective of a user in a completely different industry/country. What changes?”

Identify Stereotype Over-Reliance (Representational Bias)

If the AI describes people or professions, does it fall back on traditional gender, age, or ethnic stereotypes (e.g., always pairing nurses with women or CEOs with older men)?

Action

Edit the AI’s generated content to deliberately swap the stereotypical attributes to see if the structure of the response still holds up.
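If you would rather not do the swap by hand, the third check can be roughed out in a few lines of Python. This is a minimal sketch, not a bias detector: the `SWAPS` table and the `swap_attributes` function are hypothetical names made up for illustration, and the word list is a small assumption you should extend for the attributes you care about.

```python
import re

# Hypothetical swap table (an assumption for illustration). Extend as needed.
# Caveat: "her" is mapped to "his", which is only right when "her" is possessive.
SWAPS = {
    "she": "he", "he": "she",
    "her": "his", "his": "her",
    "woman": "man", "man": "woman",
    "women": "men", "men": "women",
}


def swap_attributes(text: str, swaps: dict[str, str] = SWAPS) -> str:
    """Return text with each whole word found in `swaps` replaced by its counterpart."""
    # Match whole words only, so "he" inside "the" or "she" is left alone.
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, swaps)) + r")\b", re.IGNORECASE
    )

    def replace(match: re.Match) -> str:
        word = match.group(0)
        swapped = swaps[word.lower()]
        # Keep a leading capital letter if the original word had one.
        return swapped.capitalize() if word[0].isupper() else swapped

    # A single pass means a swapped word is never swapped back.
    return pattern.sub(replace, text)


original = "The nurse said she would call the CEO, and he approved her request."
print(swap_attributes(original))
# The nurse said he would call the CEO, and she approved his request.
```

Read the swapped version next to the original. If it suddenly sounds odd or the reasoning no longer holds, the response was probably leaning on the stereotype rather than on the facts.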

1 Big Idea

💡 The Human Algorithm: Prioritizing Empathy in the AGI Era

The prevailing narrative in AI development focuses heavily on technical performance: faster chips, larger models, and achieving Artificial General Intelligence (AGI). While this pursuit of innovation is vital, it risks making us ethically near-sighted. We are building technology capable of radically changing society, yet we often fail to ask the fundamental question: What kind of society do we want to create with it?

The core challenge isn’t the AI’s intelligence; it’s our wisdom in managing it. As systems grow more autonomous, they increasingly operate as “black boxes”: decision engines whose processes are too complex for even their creators to fully parse. This lack of transparency undermines accountability. If an AI unjustly denies a loan or makes a catastrophic market trade, who is responsible? The programmer? The CEO? The model itself? Ethical oversight demands that we enforce “explainability” in critical systems, ensuring human stewards can trace, audit, and correct every major automated decision.

Furthermore, we must push back against the economic narrative that positions AI purely as a tool for efficiency and job replacement. The real, enduring value of human labor lies in creativity, complex problem-solving, and emotional intelligence, areas where AI can assist but cannot replace people. This “1 Big Idea” is a call to action for a Human-Centric AI movement: one that measures success not in processing speed, but in societal well-being. This means designing AI to augment human capabilities, fostering new forms of labor, and ensuring the immense wealth created by automation is distributed equitably.

The true test of our intelligence will not be building AGI, but ensuring that as we scale up its power, we also scale up our human values. We must integrate empathy, fairness, and accountability into the very fabric of the technology. If we fail to do this, if we allow the pursuit of technical power to outpace our ethical frameworks, we risk creating a future that is hyper-efficient, yet fundamentally inhumane. We must be the human algorithm that guides the machine.

Share your thoughts!

P.S. If you found this week’s breakdown useful, please forward it to a friend who is trying to figure out if we’re in an AI bubble or an AI revolution. Let’s keep the conversation human.

P.P.S. If you found these AI insights valuable, a contribution to the Brew Pot helps keep the future of work brewing.

Resources

[1] CBS News. (n.d.). Anthropic CEO Dario Amodei warning of AI potential dangers: 60 Minutes transcript.

[2] The New York Times. (2025, November 17). Bezos’ Project Prometheus.

[3] Business Insider. (2025, November). ‘Big Short’ Michael Burry.

[4] CBS News. (n.d.). Artificial intelligence AI bubble stock market economy dotcom.

Sip smarter, every Tuesday. (Refills are always free!)

Cheers,

Nadina

Host of TechSips with Nadina | Chief Strategy Architect ☕️🍵
