AI’s Dual-Speed Future

The clash between AI's massive economic boom and the human cost of its flawed, real-world systems.

"We’re rightfully impressed by the speed of AI, but we must be far more concerned with its direction. A faster future is not better if it’s not a fair one." — Nadina D. Lisbon

Hello Sip Savants! 👋🏾

The whiplash from the AI headlines this past week says it all. A couple of weeks ago, we discussed the “Foundation of the Agentic Era”[4]. Now, we’re seeing the real-world consequences of that era arriving with stunning speed. In one breath, we’re told that AI infrastructure spending is now a primary driver of the U.S. economy, adding more than a full percentage point to GDP growth[2]. In the very next, we read the shocking story of a student being handcuffed because an AI-powered surveillance system mistook his bag of chips for a weapon[3]. This isn’t just a paradox; it’s the central challenge of 2025. We are funding the technology at light speed, but we are failing to fund the humanity (the safety, oversight, and ethical guardrails) to match. It’s time we bridge that gap.

3 Tech Bites

💰 AI as an Economic Engine

AI spending is now a key driver of the U.S. economy. Massive investment in data centers and chips added an estimated 1.1 percentage points to GDP growth in the first half of 2025[2]. That spending is masking slowdowns in other sectors. In other words, growth is currently coming from building AI, not from using it: the productivity gains from deploying the technology have not yet arrived[2].

🤖 The “Agentic Era” Arrives

As we’ve discussed, agentic AI is about taking action, not just creating content. What’s new is how quickly it is being deployed. The recent “Agentic AI and the Student Experience” conference at ASU explored using these systems as proactive “equalizers” for tasks like personalized student advising and complex administrative work[1]. This rapid shift toward autonomous AI raises the bar for trust, ethical design, and human supervision[1].

🚨 A Costly AI Mistake

A 16-year-old student in Baltimore, Maryland, was handcuffed by armed police in a terrifying incident. A school AI surveillance system mistook his bag of Doritos for a firearm[3]. The system, designed to detect guns, flagged the snack bag as a threat. School staff canceled the alert, but police had already been dispatched. This event is a stark reminder that AI lacks human understanding. Its “false positive” errors can cause real-world trauma[3].

5-Minute Strategy

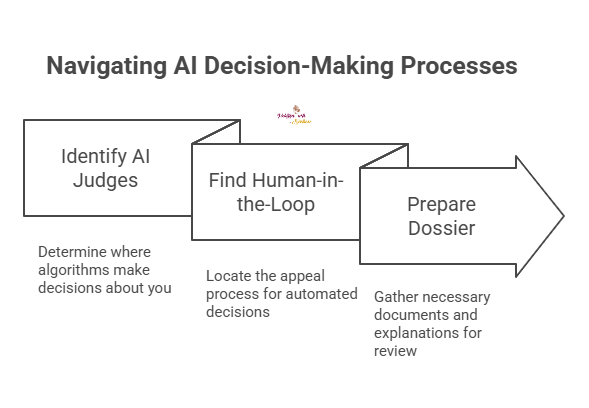

🧠 Audit Your Algorithmic “Single Points of Failure”

The “Doritos” incident[3] is an extreme example of an AI “false positive.” More common failures happen silently in our inboxes and applications. Use these 5 minutes to find where an automated decision could block you and plan your human-centric appeal.

Identify Your AI “Judges”

Where does an algorithm make a decision about you?

Hiring: Is your résumé being fed into an Applicant Tracking System (ATS)?

Finance: Is your loan or credit card application decided instantly by a bot?

Content: Is your work (social posts, ads, videos) subject to automated content moderation?

Find the “Human-in-the-Loop”

For each “judge,” find the appeal process.

If you get an automated rejection (job, loan): Do not accept it. Immediately call or email to “request a human review” of your application.

If your content is flagged: Do not just delete it. Use the official “appeal” button. This is usually the most direct way to get a person to review the AI’s mistake.

Prepare Your “Dossier”

You can’t argue with an algorithm, but you can give a human reviewer the context the AI missed.

For a job: Maintain a “Core” résumé written for a human reader rather than optimized for keywords. Have it ready to send.

For a loan: Have your recent pay stubs and a short explanation for any anomalies in your file (e.g., a late payment) ready.

For content: Keep a simple text file with your sources or an explanation of your post’s or video’s context.

The goal is to never let a “no” from a bot be the final answer. Always be ready to escalate to a human.

1 Big Idea

💡 Redirecting the Boom: From Raw Power to Human Context

We are living in an era of profound AI imbalance. On one side, we have the “fast” track: a multi-billion-dollar infrastructure boom that is propping up a significant share of economic growth[2]. This side is measured in dollars, data centers, and GDP points. On the other side, we have the “slow” track: the human consequence. This side is measured in trauma, bias, and the terrifying real-world failure of a student handcuffed at gunpoint over a bag of chips[3]. These are not two separate stories. They are the direct result of a “dual-speed” system in which our investment in raw computational power has dangerously outpaced our investment in wisdom, oversight, and human context.

This is the very challenge of the “Agentic Era” I wrote about[4]: the stakes rise exponentially when AI moves from suggesting to acting. As the ASU conference highlighted, the goal is to deploy agents that act and decide autonomously[1]. The promise is incredible: a personalized AI “equalizer” for every student[1]. But the peril is the “Doritos incident” at scale. When an autonomous agent in a school security system is empowered to act, its bias is no longer a statistical flaw; it’s a 911 call. When an agent in a bank is empowered to decide, its flaw is a loan denial that ruins a family’s dream.

This creates a massive accountability crisis. In the Baltimore incident, the student was left traumatized while the school, police, and AI vendor pointed to “protocol”[3]. The system worked as designed, but the design was wrong. The Big Idea is that we must stop treating safety and ethics as a PR checklist and start treating them as a core engineering and investment priority. The “boom” isn’t the problem, but its direction is. We must use this massive wave of capital to fund the “slow” track: to build robust “Human-in-the-Loop” systems, to mandate algorithmic transparency, and to establish a legal “right to human review” for any high-stakes automated decision.

Ultimately, we have to answer a fundamental question: what is all this technology for? Is the goal simply to boost GDP and optimize processes, or is it to augment human capability and build a safer, more equitable society? The “chips-as-a-weapon” incident[3] is the perfect, tragic summary of our current imbalance. We have successfully built a system with superhuman perception but no human common sense. The only way to fix this is to re-center the human, not as a user to be managed, but as the ultimate authority to be served.

Share your thoughts!

P.S. Know someone else who could benefit from a sip of AI wisdom? Share this newsletter and let’s make sure our conversations about tech are grounded in practical reality and human values!

P.P.S. If you found these AI insights valuable, a contribution to the Brew Pot helps keep the future of work brewing.

Resources

[4] Lisbon, Nadina. “The Foundation of the Agentic Era.”

Sip smarter, every Tuesday. (Refills are always free!)

Cheers,

Nadina

Host of TechSips with Nadina | Chief Strategy Architect ☕️🍵
